
ASEAN

The 10 member countries of the Association of Southeast Asian Nations (ASEAN) are all partner countries under Taiwan's New Southbound Policy. ASEAN aims to maintain social and political stability through a collective regional security approach while fostering economic integration and trade development.

At the 3rd ASEAN Digital Ministers Meeting (ADGMIN), held in February 2023, all attending members recognized the importance of stronger cooperation and planning in artificial intelligence (AI) and digital skills, which they acknowledged as essential to bridging talent and skill gaps across the ASEAN region. Embracing emerging technologies such as blockchain and AI was highlighted as crucial to digital transformation. This requires adapting relevant policies and regulations so that emerging technologies develop within an innovative, responsible, and secure ecosystem.

Ministers also confirmed plans to implement the ASEAN Strategy Guidance on Artificial Intelligence and to complete the Artificial Intelligence Landscape Study Report, both intended to guide and consolidate AI and digital workforce initiatives within the ASEAN framework.

Singapore will host the 4th ASEAN Digital Ministers Meeting in January next year. Hosting the meeting is in line with a July 2023 parliamentary report by Singapore's Ministry of Communications and Information (MCI), which stated the country's intention to work with ASEAN member countries to formulate an ASEAN Guide on AI Governance and Ethics.

Singapore

In both 2021 and 2022, Singapore ranked second in the world on the Government AI Readiness Index, performing strongly across the index's three pillars: government, the technology sector, and data and infrastructure. Not only does Singapore have the most comprehensive AI readiness among Asian countries, but it was also the first country in Southeast Asia to publish a national AI strategy.

As early as November 2014, Singapore's Prime Minister introduced the concept of a "Smart Nation," envisioning a forward-looking landscape encompassing a digital government, a digital society, and a digital economy. Since October 2023, the MCI has been responsible for managing and integrating the former Smart Nation and Digital Government Group (SNDGG), which previously oversaw the Smart Nation strategy, the digitalization of government services, and the development and application of digital technologies and capabilities. The consolidation is intended to strengthen organizational capabilities, pool resources, foster interdepartmental collaboration, and execute government technology policies more effectively. It also aims to address the challenges posed by digital development while leading the way in the digital economy, digital readiness, and information security.

In November 2019, Singapore launched the National Artificial Intelligence Strategy, which aims to drive AI innovation and adoption by developing and deploying scalable, impactful AI solutions. The strategy focuses on high-value areas that benefit both citizens and industries. By targeting these key sectors, the strategy aims for Singapore to become a leader in AI by 2030.

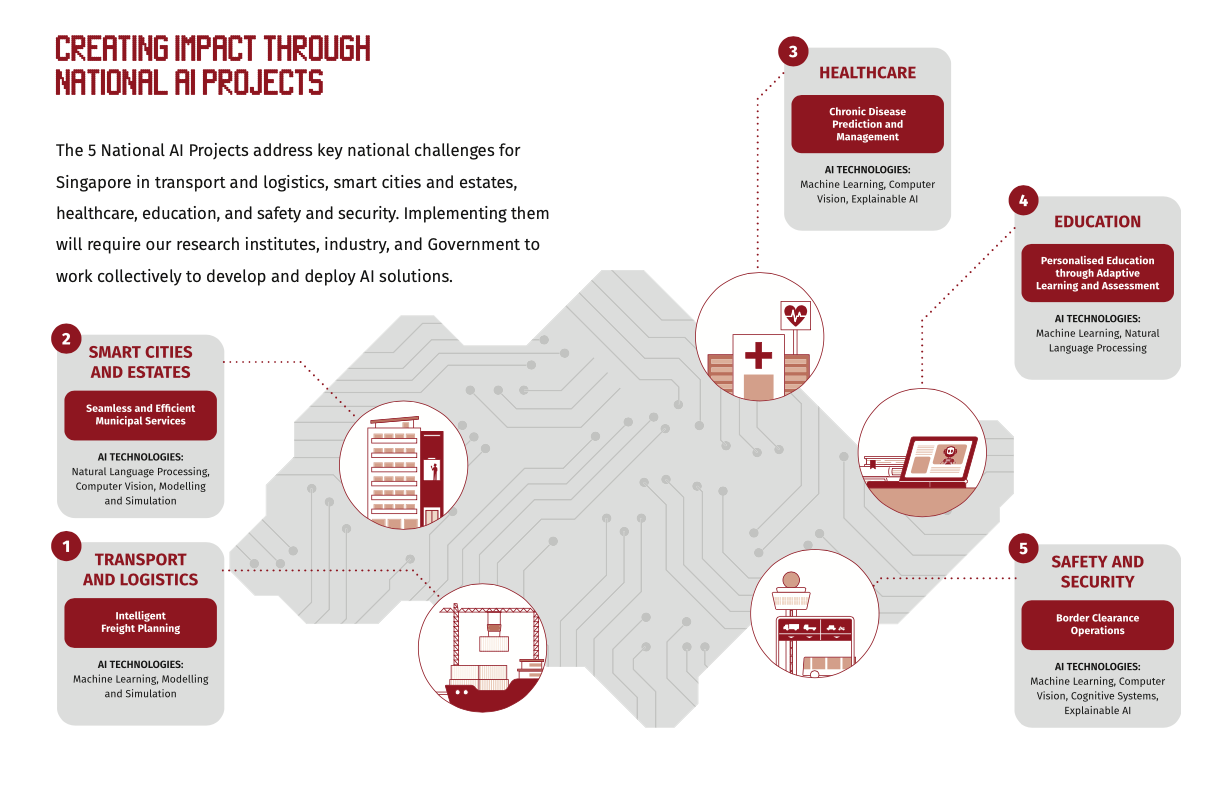

The 5 National AI Projects address key national challenges for Singapore

(Source & Credit: Smart Nation Singapore)

The National AI Projects within the Strategy focus on five key areas across Singapore: Transport & Logistics, Smart Cities & Estates, Healthcare, Education, and Safety & Security. To demonstrate the value and benefits of AI, the strategy also sets out five Ecosystem Enablers:

- Establishment of collaborations between industry, government, and research entities (Industry-Government-Research Triple Helix Partnerships).

- Development of domestic AI talent and educational programs.

- Strengthening a robust cross-agency data architecture.

- Creation of a progressive and trusted environment.

- Collaboration with international partners.

MCI Minister Josephine Teo said, "Artificial intelligence and machine learning have shaped people's daily experiences and influenced decisions. The government should strive to provide better services to citizens through AI." Since the launch of the National Artificial Intelligence Strategy, over 85% of government agencies in the country have adopted at least one AI technology to enhance productivity.

In addition, the Singaporean government plans to use large language models (LLMs) to integrate social services and government subsidy programs, automatically screening large volumes of information against program requirements. The aim is to reduce government costs and processing time while making policy formulation and implementation more efficient. Singapore has also introduced the testing tool "AI Verify" to assess the security and reliability of AI systems and whether they align with the public interest.

In May 2023, Google Cloud and the Government Technology Agency of Singapore (GovTech) launched the Artificial Intelligence Government Cloud Cluster (AGCC) to help government agencies use AI to improve efficiency and deploy AI applications responsibly. As part of this collaboration, the two parties designed several Digital Academy programs to build in-house expertise across government agencies in data science, AI, and the development of AI innovation strategies, with the goal of implementing best practices in data governance.

In July 2023, Singapore also introduced the AI Trailblazer Initiative, which aims to give public officers access to AI and machine learning tools to build government AI capabilities. Google provided AI models and development tools to Singaporean government and organizational units, enabling them to build and test their own generative AI solutions in a cloud environment. The initiative is Google Cloud's first step in supporting the development and application of AI in Singapore. Further planned initiatives include AI talent development, support for local AI startups, and the promotion of responsible AI deployment among enterprises.

Furthermore, Singapore has launched the AI Apprenticeship Programme (AIAP) to comprehensively develop local AI talent. This program offers tailored courses for different groups, such as AI for Everyone (AI4E)®, AI for Kids (AI4K)®, AI for Students (AI4S)®, and AI for Industry (AI4I)®. Training and internships related to AI are eligible for government subsidies as part of this initiative.

The Singaporean government is not only dedicated to fostering AI development and nurturing talent but also emphasizes the formulation of AI-related policies, regulations, and guidelines across various domains. Initiatives include the Digital Economy Framework for Action, the National AI Programme in Finance, the Services & Digital Economy Technology Roadmap & Services 4.0, AI in Healthcare Guidelines, and the establishment of the Advisory Council on the Ethical Use of AI and Data.

In conclusion, Singapore stands out in digital governance for its innovative measures and balanced approach. Coupled with a favourable business and legal environment, this has fostered exemplary public-private collaborations that have demonstrated innovation and efficiency in AI development. At the 4th ASEAN Digital Ministers Meeting, ASEAN members hope that Singapore will share its experience in AI policy formulation and help with implementation across the region.

India

In June 2018, the Indian government introduced the National Strategy for Artificial Intelligence to research and develop an AI ecosystem under the motto #AIforAll. The strategy aims to promote AI applications and close technology gaps across the population. The National Institution for Transforming India (NITI Aayog) has been entrusted with implementing the strategy, focusing on five major domains: agriculture, healthcare, education, smart cities and infrastructure, and intelligent transportation. NITI Aayog has adopted three execution strategies:

- Conducting exploratory Proof of Concept AI projects across various sectors.

- Building a vibrant AI ecosystem.

- Actively collaborating with domain experts and stakeholders.

The strategy notes that to benefit from large-scale AI deployment, India must address current bottlenecks in AI development: a lack of broad expertise in AI research and application, the absence of an enabling data ecosystem, the lack of collaborative approaches, privacy and security concerns, and the high cost and low awareness of AI adoption. To overcome these challenges, India has proposed establishing Centres of Research Excellence (CORE) to nurture AI talent and capabilities. These centres aim to advance core AI technologies by generating new knowledge in the field.

In May 2022, India's Ministry of Electronics and Information Technology (MeitY) released the draft National Data Governance Framework Policy (NDGFP). The framework is built on transforming and modernizing the government's data collection and management processes and systems. The Indian government aims to establish a standardized approach to data management, improving how government data is governed, accessed, and used. The goal is to make government data management, decision-making, project evaluation, and service delivery more effective and efficient. The framework is also positioned as a catalyst for India's digital economy, driving its artificial intelligence, data analytics, and startup ecosystems.

In July 2023, IndiaAI and Meta signed a memorandum of understanding (MoU) to collaborate in the field of AI and emerging technologies. The initiative aims to bring Meta's open-source AI models into India's AI ecosystem, which could benefit a larger section of the population. India's leadership in digital matters and Meta's open approach to AI innovation are expected to complement each other. IndiaAI, launched by the Ministry of Electronics and Information Technology, serves as the country's largest database of national AI-related policies and research. Through close collaboration with Meta, the program aims to deliver technological advancements to industry, government, and the research community. The collaboration has the potential to create substantial social and economic opportunities, strengthen India's digital leadership, and ensure that AI is tailored to India's unique needs.

India, which became the world's most populous nation in 2023, has 122 languages, 22 of which are recognized as official languages under the Indian Constitution. One of the joint initiatives under India's collaboration with Meta aims to build datasets for Indian languages. The objective is to support translation and large language models, prioritizing low-resource languages and using Meta's AI language models such as Llama, Massively Multilingual Speech (MMS), and No Language Left Behind (NLLB). The initiative promises to enhance social inclusion and strengthen government service capabilities. Both parties are also considering establishing a Centre of Excellence to build India's expertise in AI and emerging technologies, which would contribute to the development of AI talent in India.

In May 2023, Taiwan and India held the Indo-Taiwan Bilateral Workshop on Cyber Security at the Indian Institute of Technology Jammu (IIT Jammu) to address various aspects of digital security. AI-related discussions explored how AI could strengthen cybersecurity defence capabilities and how to defend against AI-based attacks. Both India and Taiwan face external cyber threats and misinformation, which have prompted a joint effort to build AI-enabled cybersecurity defences. Taiwan's representatives at the workshop included Chin-Tsan Wang (王金燦), Director of the Science and Technology Division of the Taipei Economic and Cultural Center in New Delhi, along with seven cybersecurity experts from universities such as National Chung Cheng University, National Yang Ming Chiao Tung University, National Taiwan University of Science and Technology, and National Cheng Kung University. The workshop was organized following a decision made at the 11th India-Taiwan Joint Committee Meeting on Cooperation in Science and Technology, held in 2022 between Taiwan's National Science and Technology Council and India's Department of Science & Technology (DST).

Australia

In June 2021, the Australian government issued the Artificial Intelligence Action Plan, which focuses on four major areas: (1) helping Australian businesses adapt to and adopt AI; (2) establishing Australia as a global hub for AI talent; (3) using cutting-edge AI technology to address national challenges; and (4) becoming a world leader in responsible and inclusive AI. The overarching goal is for Australia to lead the world in developing secure, reliable, and responsible AI. The plan aims to enhance competitiveness, drive industrial transformation, create domestic jobs, and stimulate economic growth.

Specific measures in the plan include establishing the National AI Centre, which aims to consolidate Australia's AI expertise and capabilities and to address the obstacles small and medium-sized enterprises (SMEs) face in adapting to, developing, and using AI technologies. A Capability Centre is also being created to provide pathways to talent, tools, and advice on market demand for AI transformation, encouraging businesses to adopt AI with confidence to raise productivity, commercialize products, increase competitiveness, and create jobs. Initiatives such as the Next Generation AI Graduates Program aim to cultivate domestic talent and attract international talent, positioning Australia as a hub for AI talent. The strategy also includes AI investments in defence and the use of cutting-edge AI technologies through initiatives such as the Machine Learning & Artificial Intelligence Future Science Platform (MLAI FSP) and the Medical Research Future Fund. Finally, Australia is implementing its AI Ethics Framework to ensure that human rights are protected as AI develops.

For comparison, the European Union (EU) aims to ensure that AI products and services on its market align with EU values, shifting from encouraging self-regulatory guidelines to setting market surveillance standards. In June 2023, the European Parliament adopted its position on the EU Artificial Intelligence Act, the world's first comprehensive AI legislation. The Act's primary aim is to ensure that AI systems in the EU are safe, transparent, traceable, non-discriminatory, and environmentally friendly. It also emphasizes that these systems should be overseen by humans rather than left to operate autonomously, to prevent harmful outcomes. The Act sets different rules for different risk levels, categorized as "Unacceptable Risk," "High Risk," and "Limited Risk."

Beyond government initiatives, Australia is also collaborating with the private sector on AI technology and applying AI across various domains to address social issues. In skills education, for instance, technology companies have introduced training programs and certifications in digital technologies, with a particular focus on closing the digital divide by supporting women and Indigenous Australians.

Funding from multinational corporations has been a significant driver of these efforts. One example is the Digital Future Initiative, launched by Google in November 2021, a five-year investment in Australian infrastructure, research, and partnerships. Google also invested in the National AI Centre of the Commonwealth Scientific and Industrial Research Organisation (CSIRO). Resulting collaborations include:

- Establishing Google Research Australia, which works with local research entities and other Google Research hubs to explore AI/ML solutions to critical Australian issues such as natural disasters, marine conservation, mental and physical health, and energy. The initiative also helps CSIRO use AI to analyze underwater images of marine life, for example to identify the crown-of-thorns starfish, a species that damages coral reefs. Compared with traditional methods, AI enables scientists to monitor the Great Barrier Reef's coral ecosystem more effectively, frequently, and accurately. This research has since been extended to Indonesian and Fijian waters.

- In 2020, Google collaborated with the World Wide Fund for Nature (WWF) to launch the Eyes on Recovery project, which deployed cameras in areas of Australia affected by wildfires. The Wildlife Insights AI model developed by Google processed over 7 million images capturing more than 150 species in the disaster zone, identifying species with an average accuracy of slightly over 90% and enabling researchers to track the recovery of wildlife. The technology is also expected to be used in future efforts to monitor invasive species and the rehabilitation of endangered species.

- Google collaborated with the Queensland University of Technology (QUT) to analyze 17 million hours of archived bird vocalizations. They shifted from traditional manual methods to AI-based identification and monitoring of bird species. By employing automated audio detection, scientists can now monitor bird populations more accurately and efficiently, enabling better land management decisions and conservation measures.

- Google Research Australia collaborated with the National Acoustic Laboratories (NAL) and four other organizations to apply AI/ML to the development of hearing and communication technologies. They built individualized hearing models that identify and filter environmental sound sources according to a person's specific hearing needs. This enhanced assistive hearing technology aims to improve accessibility for people with hearing impairments, enabling them to engage more fully with the world.

Google Australia Managing Director Mel Silva Giving a Speech

(Source & Credit: Google Australia Blog)

State and territory governments in Australia have also implemented their own measures for AI development. For instance, in 2022 the Victorian government announced the establishment of the Australian AI Centre of Excellence (CoE), which brings together academic and industry partners to provide research and development as well as business and educational programs. Similarly, the Queensland government set up the Queensland AI Hub (QLD AI Hub), which aims to connect industry, government, academia, and research, attract AI talent, generate employment opportunities, and foster economic growth.

One notable initiative is the AI Assurance Framework implemented by the New South Wales government. When developing, deploying, or implementing AI projects, all government departments and agencies in the state are required to refer to the Artificial Intelligence Strategy, progressively review their processes, and strictly adhere to the five principles set out in the AI Ethics Policy Framework. Under the Framework, government employees should always consider the limitations and risks of AI, so the deployment and use of AI should always prioritize safety and data privacy. The Framework also requires project personnel and policymakers to conduct a "self-assessment" of AI projects covering categories such as benefits, risks, harms, fairness, privacy, security, transparency, and accountability. Regular review is considered best practice for mitigating potential risks and ensuring protection. The assurance framework's risk and harm assessments align with the risk levels specified in the European Union's Artificial Intelligence Act.

Other Australian government entities have also released statements and policy positions on AI trends. For instance, the Department of Industry, Science and Resources published the Safe and Responsible AI in Australia: Discussion Paper, the eSafety Commissioner released the Tech Trends Position Statement: Generative AI, and the Australian Research Council (ARC) issued the Policy on Use of Generative Artificial Intelligence in the ARC's Grants Programs.

Beyond looking to the future, the Australian government has also reformed existing foundational digital and privacy laws, including updates to the Privacy Act 1988 and the proposed Privacy Legislation Amendment (Enforcement and Other Measures) Bill 2022. In addition, the Data Availability and Transparency Act 2022 establishes a data-sharing scheme for the public sector that allows research institutions to engage in AI research and development. Users who fail to meet the scheme's requirements have their data access revoked. These measures aim to provide flexibility while ensuring trust and security.

New Zealand

Although the New Zealand government has not yet issued a national AI policy, its approach to AI and its direction can be discerned from other government digital policies and strategies. For instance, in September 2022 New Zealand released The Digital Strategy for Aotearoa, which aims to build a digital system that is trustworthy, inclusive, and growth-oriented. The strategy highlights "trust," emphasizing that digital and data systems should be fair, transparent, secure, and accountable. It also prioritizes meeting people's needs and respecting the principles of the Treaty of Waitangi. On Māori issues, the government has proposed measures such as Māori Data Governance, under which Māori and the government collaborate on designing data governance models, with the aim of enhancing Māori trust in official data systems.

Notably, in July 2020 the New Zealand government introduced the Algorithm Charter for Aotearoa New Zealand, regarded as the world's first charter for transparency and accountability in the use of data and algorithms. Drawing on five policies and frameworks, including Government Use of Artificial Intelligence in New Zealand and Trustworthy AI in Aotearoa - AI Principles, the charter recognizes the value of algorithms in helping government agencies use data to improve public services: algorithms can standardize business processes and analyze large datasets to compensate for blind spots in human decision-making. The charter also stresses that algorithms must be carefully designed and used to avoid perpetuating or magnifying bias.

Another commitment in the charter is "transparency," which involves explaining how data is collected, protected, and stored and how algorithms reach decisions, as well as integrating Māori perspectives to promote Māori involvement. The "accountability" commitment, in turn, stresses that algorithms should be overseen by humans.

In July 2023, New Zealand's Department of Internal Affairs, the National Cyber Security Centre, and Statistics New Zealand jointly released "Initial Advice on Generative Artificial Intelligence in the Public Service," a guideline for the use of generative AI (GenAI) in public services. It stipulates that GenAI should not be used to process data classified as confidential or higher, to avoid data breaches that could affect societal security and cause potentially irreversible harm to the economy and public services.

The advice specifically notes that free versions of GenAI tools carry higher risks than paid versions and should not be deployed within agencies, where they could become "Shadow IT." A comprehensive assessment is recommended before GenAI is used, and agencies should actively manage the AI they use, reviewing its decision-making processes and outcomes.

Based on currently available information, New Zealand places particular emphasis on transparency, accountability, and inclusivity in digital technology, which is expected to lay a strong foundation for the country's AI strategy. Its active engagement of Māori in technological development shows that technological advancement can embody diverse values.
